POET: Training Neural Networks on Tiny Devices with Integrated Rematerialization and Paging

Shishir G. Patil, Paras Jain, Prabal Dutta, Ion Stoica, Joseph Gonzalez

UC Berkeley

Train BERT and other large models on smartphones

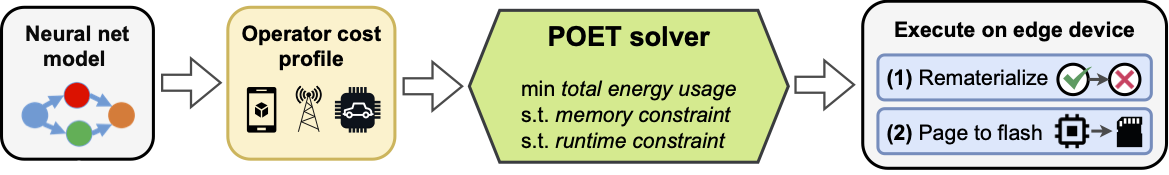

POET optimizes state-of-the-art ML models for training on edge devices. Each operator of the ML model is profiled on the target edge device to obtain fine-grained profiles. POET then integrates rematerialization and paging to produce an energy-optimal training schedule.
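
To make the trade-off concrete, here is a toy sketch of choosing, for each activation, whether to keep it in memory, rematerialize (recompute) it, or page it out and back in. It is not POET's actual optimizer: the `PROFILES` table, its numbers, the `plan` function, and the brute-force search are all hypothetical and only illustrate how per-operator profiles and a memory budget could drive an energy-minimizing choice.

```python
from itertools import product

# Hypothetical per-operator profiles (illustrative numbers, not measurements):
# name -> (activation size in KB, energy to recompute in mJ,
#          energy to page out and back in, in mJ)
PROFILES = {
    "conv1": (64, 5.0, 2.0),
    "conv2": (32, 1.0, 3.0),
    "fc": (16, 0.5, 4.0),
}


def plan(profiles, budget_kb):
    """Brute-force the cheapest keep/remat/page choice for each activation.

    Kept activations count against the memory budget and cost no extra
    energy; rematerialized or paged activations free memory but cost energy.
    """
    names = list(profiles)
    best_energy, best_plan = float("inf"), None
    for choice in product(("keep", "remat", "page"), repeat=len(names)):
        # Memory held by activations that stay resident.
        resident = sum(profiles[n][0] for n, c in zip(names, choice) if c == "keep")
        if resident > budget_kb:
            continue  # this assignment does not fit in memory
        # Extra energy spent on recomputation and paging.
        energy = sum(
            profiles[n][1] if c == "remat" else profiles[n][2] if c == "page" else 0.0
            for n, c in zip(names, choice)
        )
        if energy < best_energy:
            best_energy, best_plan = energy, dict(zip(names, choice))
    return best_energy, best_plan


energy, schedule = plan(PROFILES, budget_kb=32)
print(energy, schedule)
```

With a 32 KB budget, this toy search keeps `conv2` resident, pages `conv1`, and rematerializes `fc`, which shows why considering both techniques together can beat using either one alone. A real schedule would also have to account for when each tensor is needed during the forward and backward passes, which this sketch ignores.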
